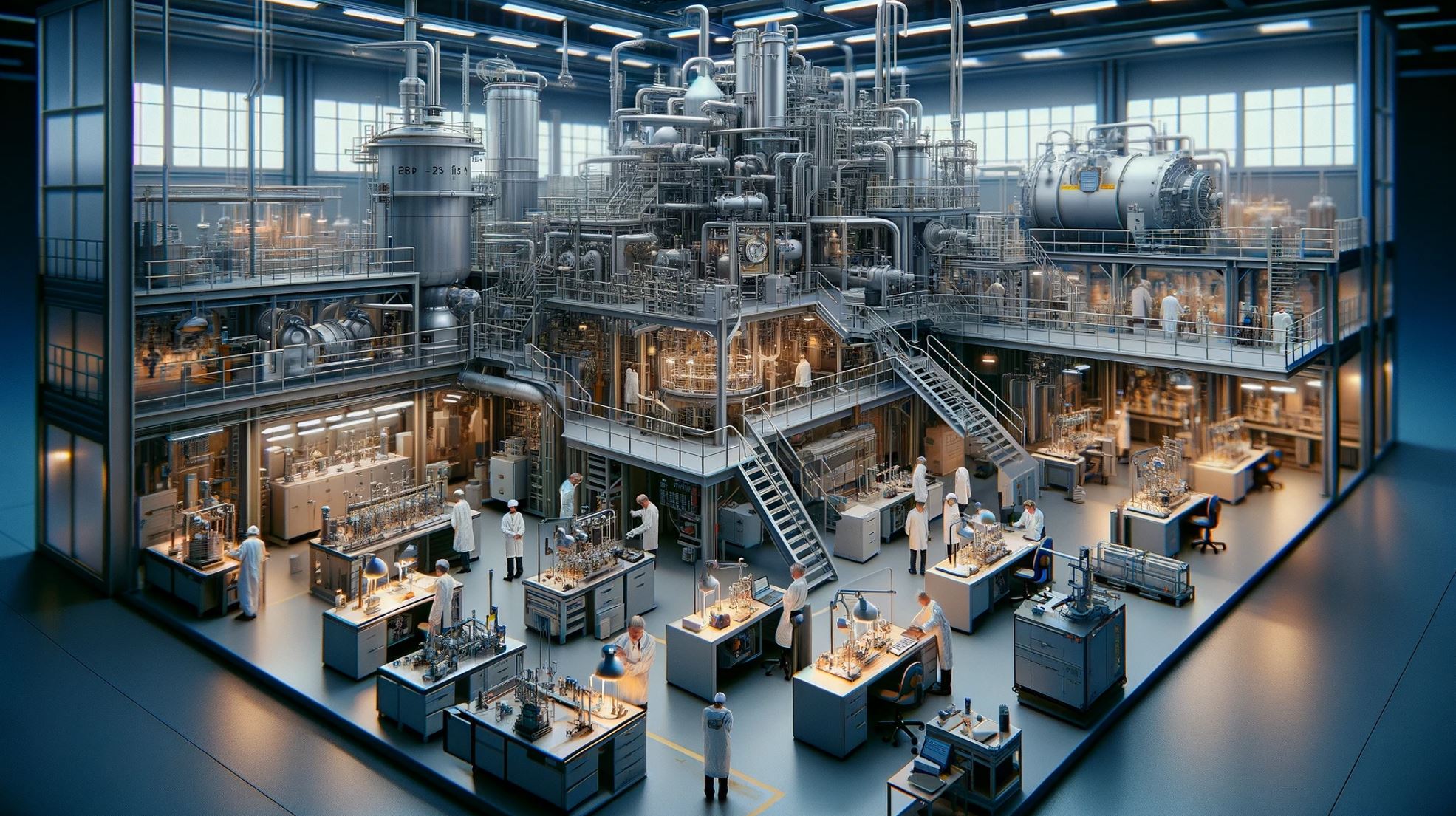

“Can We Believe the Simulation Results?” is a question that comes up across many fields, especially in engineering and the sciences, because we rely on simulations to predict outcomes, understand complex systems, and inform decision-making. Simulations attempt to mimic real-world processes and scenarios.

Is the Model a Mini-Me of Reality?

Real-world physical processes can be incredibly complex, involving numerous variables and interactions. Simulating these processes in their full complexity often demands enormous computational resources. Without simplification, simulations might:

- Become computationally infeasible due to the volume of calculations and data processing required.

- Take an impractically long time to converge to a solution, or they may not converge at all, especially in cases involving iterative computational methods.

- Create more opportunities for errors, both in coding the simulation and in interpreting the results.

- Require more data (in both quantity and quality) than is available or manageable.

On the other hand, simplification affects simulation results. It could:

- Lead to a loss of specific details.

- Lead to misinterpretation of the results.

- Represent critical parameters or phenomena inadequately.

Simplification is often necessary to make simulations computationally feasible. It enables the simulation to run, but at the cost of reduced detail and potentially reduced accuracy. Understanding the nature and impact of simplifications is important for anyone interpreting and applying simulation results.
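The trade-off between detail and cost can be seen in a toy example. The sketch below is only an illustration, not a real simulation: it approximates the integral of sin(x) from 0 to π (exact value 2) with the trapezoidal rule, where the number of panels stands in for "level of detail". The function names `trapezoid` and `resolution_study` are made up for this example.

```python
import math

def trapezoid(f, a, b, n):
    """Approximate the integral of f over [a, b] using n equal-width panels."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h

EXACT = 2.0  # integral of sin(x) from 0 to pi

def resolution_study(panel_counts=(4, 16, 64)):
    """Return (n, absolute error) pairs for increasingly detailed models."""
    return [(n, abs(trapezoid(math.sin, 0.0, math.pi, n) - EXACT))
            for n in panel_counts]

if __name__ == "__main__":
    for n, err in resolution_study():
        print(f"panels={n:3d}  abs error={err:.6f}")
```

The coarse model is cheap but less accurate. Each refinement costs more function evaluations and buys a smaller error, which is the same bargain a simplified simulation makes.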

Garbage In, Garbage Out – GIGO

GIGO is a principle highlighting the importance of input quality in determining output quality. It suggests that if the input is flawed or inaccurate, such as a poorly formulated mathematical equation, the resulting output will also be flawed. This concept is crucial in systems where output accuracy is directly influenced by the quality of the input. Even with flawless logic in a program, the output will be unreliable if the input is incorrect.

Key strategies to avoid GIGO:

- Focus on collecting high-quality data (quality over quantity), ensuring that the data are accurate, relevant, and complete.

- Have data verification processes to check the data for errors or inconsistencies before using it in the simulation.

- Cross-validate data against multiple sources.

- Compare data with historical records to ensure consistency.

- Use data from reliable and credible sources.

- Consult with subject matter experts to validate the suitability and accuracy of the data.
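A data verification step like the one described above can be as simple as a few automated checks that run before the simulation does. The following is a minimal sketch; the record layout, the field names (`temperature_K`, `pressure_Pa`) and the allowed ranges are all assumptions chosen for illustration.

```python
import math

def check_inputs(records, limits):
    """Return a list of human-readable problems found in the input records.

    records: list of dicts, one per measurement (hypothetical layout)
    limits:  dict mapping field name -> (min_allowed, max_allowed)
    """
    problems = []
    for i, rec in enumerate(records):
        for field, (lo, hi) in limits.items():
            value = rec.get(field)
            if value is None or (isinstance(value, float) and math.isnan(value)):
                problems.append(f"record {i}: '{field}' is missing")
            elif not lo <= value <= hi:
                problems.append(f"record {i}: '{field}'={value} outside [{lo}, {hi}]")
    return problems

# Hypothetical example data: temperature in kelvin, pressure in pascals.
LIMITS = {"temperature_K": (200.0, 400.0), "pressure_Pa": (5.0e4, 2.0e5)}
RECORDS = [
    {"temperature_K": 293.15, "pressure_Pa": 101325.0},
    {"temperature_K": -5.0, "pressure_Pa": 101325.0},  # perhaps entered in Celsius
    {"temperature_K": 300.0},                           # pressure missing
]

if __name__ == "__main__":
    for problem in check_inputs(RECORDS, LIMITS):
        print(problem)
```

Checks like these do not prove the data are right, but they catch the obvious mistakes (wrong units, missing fields) before they quietly become wrong results.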

Real World vs. Sim World – Double-Checking the Work

Verification and Validation (V&V) processes are critical in the development and application of simulation models in science and engineering. The difference between the two:

- “Verification” ensures that the simulation correctly implements the intended model, i.e., that the model is built right. It checks the accuracy of the code, algorithms, and numerical methods.

- “Validation” confirms that the simulation accurately represents the real-world system or phenomenon it is intended to model, i.e., that the right model is built. It involves comparing simulation results with experimental or real-world data.

V&V also deepens understanding of the model and its limitations, helping modelers and users see under what conditions the model is valid and where it might fail.

Matching simulation results with what actually happens in the real world builds confidence in the simulation for several key reasons. For example, it can:

- Confirm that the model accurately represents the major physical or chemical processes it is intended to simulate.

- Build confidence in future projections, which is important for predictive simulations.

- Help validate the set of assumptions, confirming that they are reasonable and correctly implemented.

- Reveal inconsistencies between simulated and actual outcomes where assumptions might be incorrect or oversimplified, guiding model refinement.

- Identify potential failures so that they can be mitigated before they occur in practice.
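In practice, "matching" simulation results against reality usually means putting a number on the mismatch. A minimal sketch of such a comparison is shown below, using root-mean-square error and the worst-case relative error. The data values and the 10 % acceptance threshold are invented for illustration; a real project would set its own criteria.

```python
import math

def validation_metrics(simulated, measured):
    """Compare paired simulated and measured values.

    Returns (rmse, max_relative_error). Assumes no measured value is zero.
    """
    if len(simulated) != len(measured) or not simulated:
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(s - m) ** 2 for s, m in zip(simulated, measured)]
    rmse = math.sqrt(sum(squared) / len(squared))
    max_rel = max(abs(s - m) / abs(m) for s, m in zip(simulated, measured))
    return rmse, max_rel

# Hypothetical numbers, for illustration only.
SIMULATED = [10.0, 20.0, 30.0, 40.0]
MEASURED = [10.5, 19.0, 30.0, 42.0]
TOLERANCE = 0.10  # assumed acceptance threshold: 10 % relative error

if __name__ == "__main__":
    rmse, max_rel = validation_metrics(SIMULATED, MEASURED)
    verdict = "within" if max_rel <= TOLERANCE else "outside"
    print(f"RMSE={rmse:.3f}, max relative error={max_rel:.1%} ({verdict} tolerance)")
```

A single pass/fail number is only a starting point. Where the mismatch is largest often says more about which assumption needs a second look.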

What If Things Change?

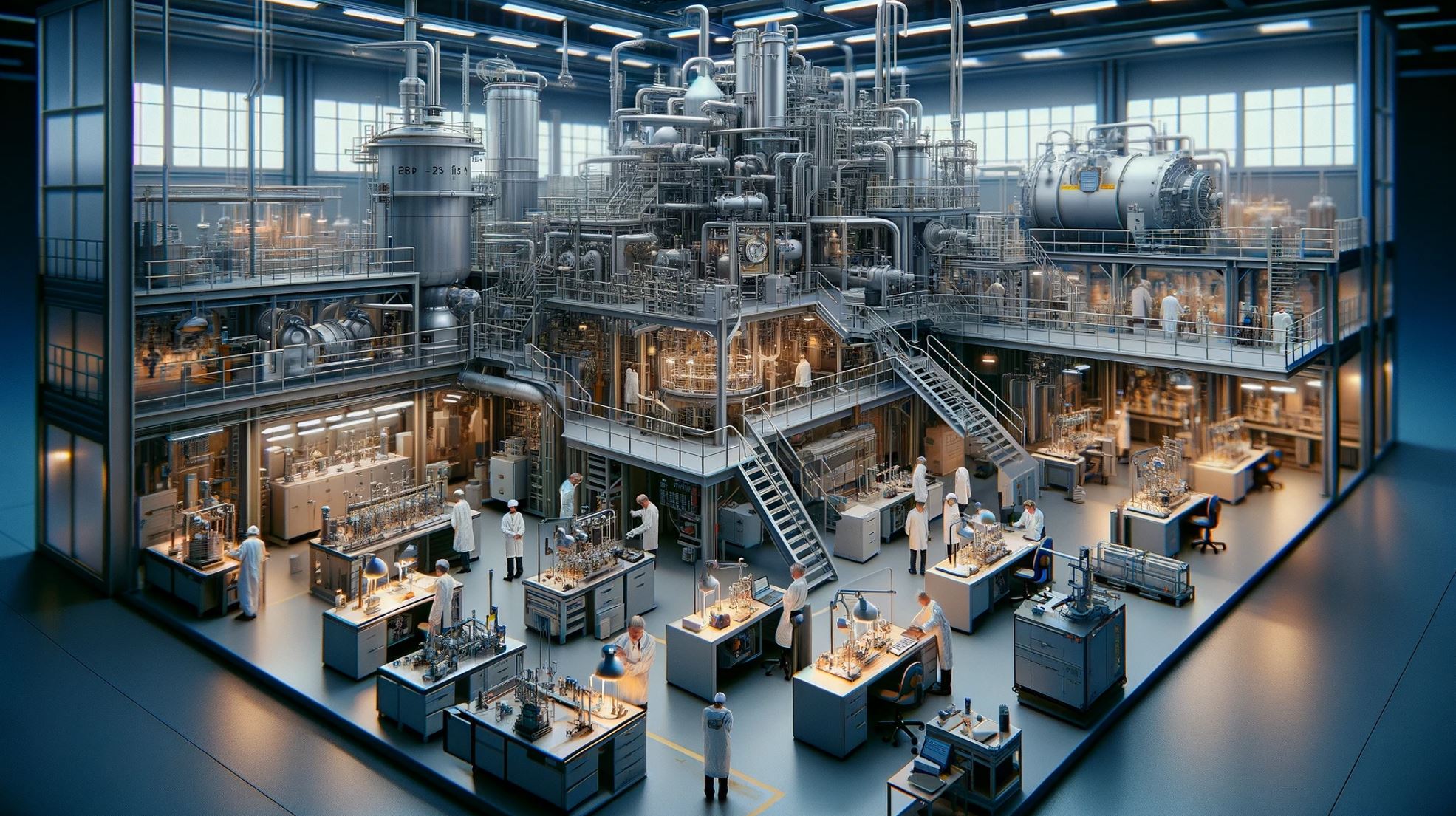

This question is about understanding how changes in a simulation’s inputs or conditions affect its results. “Uncertainty and Sensitivity Analysis” provides insights into how variations in input parameters affect the outcomes, enhancing the model’s credibility and guiding data collection and research priorities.

“Sensitivity Analysis” is a fancy term for asking, “What happens to our results if we tweak some of the starting points?” Let’s break it down:

- It is like the “what-ifs” of simulation. It’s about poking and prodding your model to see how changes in one part (like input variables) affect the outcome. It answers questions like, “What if the cost of materials goes up? How does that impact our projected profits?”

- It’s like identifying critical variables in your model. Some inputs might have a huge impact on the outcome, while others might not matter much.

- It lets you explore different scenarios.

- It helps you deal with uncertainty in simulations: finding where the blind spots are, measuring them as best as you can, and preparing for different possibilities.

- It can guide further research.
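The simplest version of these "what-ifs" is a one-at-a-time study: nudge each input by the same percentage, one input at a time, and see how much the output moves. The sketch below does this for a made-up profit model. The formula, the parameter names and the baseline numbers are assumptions chosen only to echo the material-cost question above.

```python
def profit(params):
    """Toy model (assumed for illustration):
    profit = units * (price - material_cost) - overhead."""
    return (params["units"] * (params["price"] - params["material_cost"])
            - params["overhead"])

def one_at_a_time(model, baseline, change=0.10):
    """Increase each input by `change` (as a fraction) in turn and return
    the resulting fractional change in the output for each input."""
    base_output = model(baseline)
    effects = {}
    for name in baseline:
        perturbed = dict(baseline)  # copy, so the baseline is left untouched
        perturbed[name] = baseline[name] * (1.0 + change)
        effects[name] = (model(perturbed) - base_output) / abs(base_output)
    return effects

# Hypothetical baseline scenario.
BASELINE = {"units": 1000.0, "price": 50.0, "material_cost": 30.0, "overhead": 5000.0}

if __name__ == "__main__":
    effects = one_at_a_time(profit, BASELINE)
    for name in sorted(effects, key=lambda k: abs(effects[k]), reverse=True):
        print(f"{name:14s} +10% -> profit changes by {effects[name]:+.1%}")
```

With these numbers, a 10 % rise in material cost cuts profit by about 20 %, while the same rise in overhead barely registers. One-at-a-time studies ignore interactions between inputs, so they are a first look rather than a full uncertainty analysis.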

Show Your Work, Let Others Try

“Show Your Work, Let Others Try” in the context of simulations essentially means transparency and reproducibility. It’s like cooking with a recipe that others can follow and get the same delicious result. “Others” here can mean experts who have a high level of knowledge, skill, and experience in a particular domain or field. For example, experts can:

- Ensure that simulation models accurately represent the real-world systems they are intended to mimic.

- Identify and resolve issues that may arise during the development and execution of simulations.

- Accurately interpret the results of simulations, understanding both their significance and their limitations.

- Be key to the ongoing refinement and improvement of simulation models, ensuring they remain relevant and accurate as new data and technologies emerge.

- Effectively communicate complex simulation concepts to stakeholders and policymakers, bridging the gap between technical understanding and practical application.
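On the reproducibility side, the "recipe" usually comes down to recording every input, including the random seed, next to the result. Here is a minimal sketch, assuming a toy Monte Carlo estimate of π as a stand-in for a real simulation. The names `run_simulation`, `run_and_record` and `CONFIG` are hypothetical.

```python
import json
import random

def run_simulation(config):
    """Toy Monte Carlo estimate of pi; stands in for a real simulation."""
    rng = random.Random(config["seed"])  # local generator seeded from the config
    inside = 0
    for _ in range(config["samples"]):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / config["samples"]

CONFIG = {"seed": 12345, "samples": 20000}

def run_and_record(config):
    """Return a JSON string holding both the inputs and the result,
    so someone else can rerun exactly the same case."""
    record = {"config": config, "result": run_simulation(config)}
    return json.dumps(record, sort_keys=True)

if __name__ == "__main__":
    print(run_and_record(CONFIG))
```

Anyone who receives the JSON record can rerun the same configuration and check that they get the same number, which is the simulation equivalent of following the recipe.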

Computer Power and Smarts

“Computer Power and Smarts” in the context of simulations refers to the hardware and software capabilities that are essential for running complex simulations. It’s like having a high-performance engine and a smart navigation system in a car. Here’s a look at how limitations can impact simulation.

Computational Power

- The level of detail and complexity that a simulation can achieve is often directly tied to the available computational power. High-fidelity simulations require substantial computational resources to accurately model complex physical phenomena (aerodynamic analyses, etc.).

- The speed at which simulations can be run is also limited by computational power. This is crucial in fields where real-time simulation results are needed.

Algorithmic Accuracy

- Inaccurate algorithms can lead to errors that may compound over time, especially in long-duration simulations.

- The choice of “numerical methods” (like finite element analysis, computational fluid dynamics, etc.) is often a trade-off between computational efficiency and accuracy. These methods can introduce approximations that affect the simulation results.
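How errors compound over a long run can be shown with a very small example. The sketch below integrates a frictionless oscillator (x'' = -x) with the explicit Euler method, chosen here only because it is the simplest scheme and not because any particular simulation tool uses it. The true energy stays at 0.5 forever, but each explicit Euler step multiplies the computed energy by (1 + dt²), so the error grows with every step.

```python
def euler_oscillator_energy(dt, t_end):
    """Integrate x'' = -x with explicit Euler from x=1, v=0 and return
    the final energy 0.5*(x^2 + v^2). The exact energy stays at 0.5."""
    x, v = 1.0, 0.0
    steps = round(t_end / dt)
    for _ in range(steps):
        # Both updates use the old x and v (tuple assignment).
        x, v = x + dt * v, v - dt * x
    return 0.5 * (x * x + v * v)

if __name__ == "__main__":
    for dt in (0.1, 0.01):
        energy = euler_oscillator_energy(dt, t_end=50.0)
        print(f"dt={dt:<5} final energy={energy:.3f} (exact: 0.500)")
```

A smaller time step reduces the drift but costs ten times as many steps, and the drift never disappears. That is the efficiency-versus-accuracy trade-off in miniature.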

Data Storage

- Large-scale simulations generate massive amounts of data, requiring extensive storage capacity.

- Efficiently handling, processing, and analyzing the data from simulations is also difficult, especially as the volume of data increases.
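A quick back-of-the-envelope calculation shows how fast the storage adds up. The grid size, number of variables and number of snapshots below are hypothetical, and the estimate ignores compression and file-format overhead.

```python
def output_size_bytes(cells, variables, snapshots, bytes_per_value=8):
    """Raw size of simulation output, ignoring compression and file overhead."""
    return cells * variables * snapshots * bytes_per_value

# Hypothetical case: a 512 x 512 x 512 grid, 5 stored variables
# in double precision (8 bytes each), written out 100 times.
CELLS = 512 ** 3
total = output_size_bytes(CELLS, variables=5, snapshots=100)
print(f"{total / 2**30:.0f} GiB")  # prints "500 GiB"
```

Doubling the resolution in each direction multiplies the cell count, and therefore the storage, by eight.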

Software Limitations

- The capabilities of available simulation software can limit what can be modeled and how, including limitations in features, the range of physical phenomena that can be simulated, etc.

- Integrating different software tools or databases can be limited by compatibility issues.

Hardware & Scalability Constraints

- The reliability and durability of hardware could be a limiting factor, especially in continuous or high-intensity simulation environments.

- As simulations become more detailed and encompass larger systems, the ability to scale up computationally could be limited by current technology.

Key takeaways

- Is the Model a Mini-Me of Reality? Accuracy depends on how closely the model mirrors real life, but some simplification is necessary.

- Garbage In, Garbage Out – GIGO: Poor input data leads to unreliable results.

- Real World vs. Sim World – Double-Checking the Work: Verifying that the model is implemented correctly and validating it against real-world data builds confidence in its accuracy.

- What If Things Change?: Assessing model flexibility to changes improves reliability.

- Show Your Work, Let Others Try: Being transparent and involving experts helps ensure accuracy, resolve issues, interpret results correctly, keep simulations practically applicable, and communicate complex concepts effectively to stakeholders.

- Computer Power and Smarts: Strong hardware and smart software are important for complex simulations.

Disclaimer:

The views and opinions expressed in this LinkedIn article are solely my own and do not represent the views or opinions of my current employer. This article is a personal reflection and does not involve any proprietary or confidential information from my current company. Any similarities between the ideas or concepts presented in this article and my current company’s work are purely coincidental.